OpenWayback 2.3.0 was released about a month ago. It was a modest release aimed at fixing a number of bugs and introducing a few minor features. Currently, work is focused on version 3.0.0, which is meant to be a much more impactful release.

Notably, the indexing function is being moved into a separate (bundled) entity, called the CDX Server. This will provide a clean separation of concerns between resource resolution and the user interface.

The CDX Server had already been partially implemented. But as work began on refining that implementation, it became clear that we would also need to examine very closely the API between these two discrete parts. The API that currently exists is incomplete, at times vague and even, on occasion, contradictory. Existing documentation (or code review) may tell you what can be done, but it fails to shed much light on why you'd do it.

This isn't really surprising for an API that was developed "bottom-up", guided by personal experience and existing (but undocumented!) code requirements. This is pretty typical of what happens when delivering something functional is the first priority. It is a technical debt, but one you may feel is acceptable when delivering a single customer solution.

The problem we face is that once we push for the CDX Server to become the norm, it won't be a single-customer solution. Changes to the API will be painful and, consequently, rare.

So, we've had to step back and examine the API with a critical eye. To do this we've begun to compile a list of use cases that the CDX Server API needs to meet. Some are fairly obvious, others are perhaps more wishful thinking. In between there are a large number of corner cases and essential but non-obvious use cases that must be addressed.

We welcome and encourage any input on this list. You can contribute either by editing the wiki page, by commenting on the relevant issue in our tracker or by sending an e-mail to the OpenWayback-dev mailing list.

To be clear, the purpose of this is primarily to ensure that we fully support existing use cases. While we will consider use cases that the current OpenWayback cannot handle, they will necessarily be ascribed a much lower priority.

Hopefully, this will lead to a fairly robust API for a CDX Server. The use cases may also allow us to firm up the API of the wayback URL structure itself. Not only will this serve the OpenWayback project, but getting this API right is also very important for facilitating alternative replay tools!

As I said before, we welcome any input you may have on the subject. If you feel unsure of how to become engaged, please feel free to contact me directly.

February 15, 2016

February 3, 2016

How to SURT IDN domains?

Converting URLs to SURT (Sort-friendly URI Reordering Transform) form has many benefits in web archiving and is widely used when configuring crawlers. Notably, SURT prefixes can be used to easily apply rules to a logical segment of the web.

Thus the SURT prefix:

http://(is,

will match all domains under the .is TLD.

A somewhat lesser-known ability is to match against partial domain names. Thus the following SURT prefix:

http://(is,a

would match all .is domains that begin with the letter a (note that there isn't a comma at the end).

This all works quite well, until you hit Internationalized Domain Names (IDNs). As the original infrastructure of the web does not really support non-ASCII characters, all IDNs are designed so that they can be translated into an ASCII equivalent.

Thus the IDN domain landsbókasafn.is is actually represented using the "punycode" representation xn--landsbkasafn-5hb.is.

When matching SURTs against full domain names (trailing comma), this doesn't really matter. But, when matching against a domain name prefix, you run into an issue. Considering the example above, should landsbókasafn.is match the SURT http://(is,l?

The current behavior (at least in Heritrix's much-used SurtPrefixedDecideRule) is to evaluate only the punycode version. So no, it does not match, although it would match http://(is,x.

This seems potentially limiting and likely to cause confusion.
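The behavior described above can be illustrated with a minimal Python sketch. This is not Heritrix's implementation; `host_to_surt` and `matches_prefix` are hypothetical helper names, the SURT form is simplified to bare host names, and Python's built-in `idna` codec (which follows the older IDNA 2003 rules) is assumed to be good enough for illustration.

```python
def host_to_surt(host: str, scheme: str = "http") -> str:
    """Return a simplified SURT form for a bare host name (hypothetical helper)."""
    # The built-in "idna" codec converts each label to its ASCII (punycode)
    # form, e.g. landsbókasafn -> xn--landsbkasafn-5hb.
    ascii_host = host.encode("idna").decode("ascii").lower()
    labels = ascii_host.split(".")
    # Reverse the labels so the TLD comes first; each label is followed by a comma.
    return f"{scheme}://(" + "".join(label + "," for label in reversed(labels)) + ")/"


def matches_prefix(host: str, surt_prefix: str) -> bool:
    """Plain string-prefix match against the punycode-based SURT form."""
    return host_to_surt(host).startswith(surt_prefix)


if __name__ == "__main__":
    host = "landsbókasafn.is"
    print(host_to_surt(host))
    for prefix in ("http://(is,", "http://(is,l", "http://(is,x"):
        print(prefix, matches_prefix(host, prefix))
```

Because the comparison is done on the punycode form, the IDN matches the TLD prefix and the `x` prefix, but not the `l` prefix a user would probably expect.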

January 29, 2016

Things to do in Iceland ...

... when you are not in a conference center

It is clear that many attendees at the IIPC GA and web archiving conference in Reykjavík next April (details) plan to extend their stay. Several have contacted me for advice on what not to miss. So, I figured I'd write something up for all to see.

The following is far from exhaustive or authoritative. It largely reflects my personal taste and may have glaring omissions. It probably also reflects the limits of my memory!

Reykjavík

Downtown Reykjavík has many interesting sights, museums and attractions, not to mention shops, bars and restaurants. Of particular note is Hallgrímskirkja, which rises over the heart of the city; right outside it stands the statue of Leif Eriksson. The Pearl dominates the skyline a bit further south, and there are stunning views to be had from its observation deck. In the heart of the city you'll find Alþingishúsið (Parliament building), city hall, the old harbor, Harpa (concert hall) and many other notable buildings and sights.

A bit further afield you'll find Höfði and the Sólfar sculpture. Sólfarið is without question my favorite public sculpture, anywhere.

There are a large number of museums in Reykjavík, ranging from the what-you'd-expect to the downright-weird.

Thanks to abundant geothermal energy, you'll find heated, open air, public swimming pools open year round. I highly recommend a visit to one of them. Reykjavík's most prominent public pool is Laugardalslaug.

Lastly, the most famous eatery in Iceland, Bæjarins Beztu, is in the heart of the city and worth a visit.

Out of Reykjavík

Just south of Reykjavík (15 minutes), in my hometown of Hafnarfjörður, you'll find a Viking Village!

A bit further there is the Blue Lagoon. I highly recommend everyone try it at least once. Do note that you may need to book in advance!

And just a bit further still, you'll find the Bridge Between Continents! Okay, so the last one is a bit overwrought, but still a fun visit if you're at all into plate tectonics.

Whale watching tours are operated out of Reykjavík, even in April. You'll want some warm clothes if you go on one of those!

Probably the most popular (for good reasons) day tour out of Reykjavík is the Golden Circle. It covers Geysir, Gullfoss (Iceland's largest waterfall) and Þingvellir (the site of the original Icelandic parliament, formed in 930, and a UNESCO World Heritage Site). You can do the circle in a rented car, and there are also numerous tour operators offering this trip and variations on it.

Driving along the southern coast on highway 1, you'll also come across many interesting towns and sights. It is possible to drive as far as Jökulsárlón and back in a single day, stopping at places like Selfoss, Vík í Mýrdal, Seljalandsfoss, Skógafoss and Skaftafell National Park. It may be better to plan an overnight stop, however. This is an ideal route for a modest excursion as there are so many interesting sights right by the highway.

Further afield

For longer trips outside of the capital there are too many options to count. You could go to Vestmannaeyjar, just off the south coast, or to Akureyri, the capital of the north. A popular trip is to take highway 1 around the country (it loops around). Such a trip can be done in 3-4 days, but you'd need closer to a week to fully appreciate all the sights along the way.

January 28, 2016

To ZIP or not to ZIP, that is the (web archiving) question

Do you use uncompressed (W)ARC files?

It is hard to imagine why you would want to store the material uncompressed. After all, web archives are big. Compression saves space and space is money.

While this seems straightforward it is worth examining some of the assumptions made here and considering what trade-offs we may be making.

Let's start by considering that a lot of the files on the Internet are already compressed. Images, audio and video files, as well as almost every file format for "large data", are compressed, sometimes simply by wrapping everything in a ZIP container (e.g. EPUB). There is very little additional benefit gained from compressing these files again (it may even increase the size very slightly).

For some highly specific crawls, it is possible that compression will accomplish very little.

But it is also true that compression costs very little. We'll get back to that point in a bit.

For most general crawls, the amount of HTML, CSS, JavaScript and various other highly compressible material will make up a substantial portion of the overall data. Those files may be smaller, but there are a lot more of them, especially automatically generated HTML pages and other crawler traps that are impossible to avoid entirely.

In our domain crawls, HTML documents alone typically make up around a quarter of the total data downloaded. Given that we then deduplicate images, videos and other, largely static, file formats, HTML files' share in the overall data needing to be stored is even greater. Typically approaching half!

Given that these text files compress heavily (by 70-80% usually), tremendous storage savings can be realized using compression. In practice, our domain crawls' compressed size is usually about 60% of the uncompressed size (after deduplication).
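As a rough sanity check on these figures, the arithmetic can be sketched in a few lines of Python. This is a back-of-the-envelope model, not a measurement: it assumes that text makes up about half of the stored data, that it shrinks by 70-80%, and (as a simplification) that everything else does not compress at all. The function name `compressed_fraction` is purely illustrative.

```python
def compressed_fraction(text_share: float, text_reduction: float,
                        other_reduction: float = 0.0) -> float:
    """Estimate compressed size as a fraction of the uncompressed size.

    text_share      -- share of stored bytes that is compressible text
    text_reduction  -- fraction by which that text shrinks (0.75 = 75% smaller)
    other_reduction -- fraction by which everything else shrinks (assumed 0)
    """
    text_part = text_share * (1.0 - text_reduction)
    other_part = (1.0 - text_share) * (1.0 - other_reduction)
    return text_part + other_part


if __name__ == "__main__":
    # Text is roughly half of what is stored after deduplication.
    for reduction in (0.70, 0.80):
        fraction = compressed_fraction(0.5, reduction)
        print(f"{reduction:.0%} text reduction -> {fraction:.0%} of original size")
```

Under those assumptions the estimate lands at roughly 60-65% of the uncompressed size, which is in line with the figure quoted above.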

More frequently run crawls (with higher levels of deduplication) will benefit even more. Our weekly crawls' compressed size is usually closer to 35-40% of the uncompressed volume (after deduplication discards about three quarters of the crawled data).

So you can save anywhere from ten to sixty percent of the storage needed, depending on the types of crawling you do. But at what cost?

On the crawler side the limiting factor is usually disk or network access. Memory is also sometimes a bottleneck. CPU cycles are rarely an issue. Thus the additional overhead of compressing a file, as it is written to disk, is trivial.

On the access side, you also largely find that CPU isn't a limiting factor. The bottleneck is disk access. And here compression can actually help! It probably doesn't make much difference when only serving up one small slice of a WARC, but when processing entire WARCs it will take less time to lift them off of the slow HDD if the file is smaller. The additional overhead of decompression is insignificant in this scenario, except in highly specific circumstances where CPU is very limited (but why would you process entire WARCs in such an environment?).

So, you save space (and money!) and performance is barely affected. It seems like there is no good reason to not compress your (W)ARCs.

But there may be just one: HTTP Range Requests.

To handle an HTTP Range Request, a replay tool using compressed (W)ARCs will have to access a WARC record and then decompress the entire payload (or at least from the start and as far as needed). If uncompressed, the replay tool could simply locate the start of the record and then skip the required number of bytes.
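The difference can be sketched in Python as follows. This is a simplified illustration rather than how any particular replay tool works: WARC and HTTP headers are ignored, each "record" is treated as just its payload, and the function names are hypothetical.

```python
import gzip
import io


def read_range_uncompressed(f, record_offset: int, start: int, length: int) -> bytes:
    """Seek straight to the requested byte; nothing before it is read."""
    f.seek(record_offset + start)
    return f.read(length)


def read_range_gzip(f, record_offset: int, start: int, length: int) -> bytes:
    """Decompress from the start of the record, discarding bytes until `start`."""
    f.seek(record_offset)
    with gzip.GzipFile(fileobj=f, mode="rb") as gz:
        remaining = start
        while remaining > 0:
            chunk = gz.read(min(remaining, 64 * 1024))
            if not chunk:  # record ended before the requested offset
                break
            remaining -= len(chunk)
        return gz.read(length)


if __name__ == "__main__":
    payload = bytes(range(256)) * 4096           # about 1 MiB of sample data
    earlier_records = b"x" * 100                 # stand-in for preceding records
    raw_file = io.BytesIO(earlier_records + payload)
    gz_file = io.BytesIO(earlier_records + gzip.compress(payload))

    start, length = len(payload) - 1000, 500
    a = read_range_uncompressed(raw_file, len(earlier_records), start, length)
    b = read_range_gzip(gz_file, len(earlier_records), start, length)
    print(a == b == payload[start:start + length])
```

Both functions return the same bytes, but the gzip variant has to inflate everything that precedes the requested range, which is what makes skipping around in a very large compressed record expensive.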

This only affects large files and is probably most evident when replaying video files. Users may wish to skip ahead, etc., and that is implemented via range requests. Imagine the benefit when skipping to the last few minutes of a movie that is 10 GB on disk!

Thus, it seems to me that a hybrid solution may be the best course of action. Compress everything except files whose content type indicates an already compressed format. Configure it to compress when in doubt. It may also be best to always compress files under a certain size threshold, since for small records the (compressible) headers make up a significant share of the data. That would need to be evaluated.
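A minimal sketch of such a decision rule, in Python, might look like the following. The list of content types, the exceptions and the size threshold are all illustrative assumptions and would need to be evaluated against real crawl data; `should_compress` is a hypothetical function, not part of any crawler.

```python
# Illustrative assumptions, not an authoritative list.
ALREADY_COMPRESSED_PREFIXES = ("image/", "audio/", "video/")
ALREADY_COMPRESSED_TYPES = {
    "application/zip",
    "application/gzip",
    "application/epub+zip",
}
# Types that match a prefix above but are actually quite compressible.
COMPRESSIBLE_EXCEPTIONS = {"image/svg+xml", "image/bmp"}
SMALL_FILE_THRESHOLD = 4096  # bytes; hypothetical value


def should_compress(content_type, size=None,
                    small_file_threshold=SMALL_FILE_THRESHOLD) -> bool:
    """Decide whether a record should go to a compressed output file."""
    if not content_type:
        return True  # when in doubt, compress
    mime = content_type.split(";", 1)[0].strip().lower()
    if size is not None and size < small_file_threshold:
        return True  # small records: headers are a large share of the data
    if mime in COMPRESSIBLE_EXCEPTIONS:
        return True
    if mime in ALREADY_COMPRESSED_TYPES or mime.startswith(ALREADY_COMPRESSED_PREFIXES):
        return False
    return True  # unknown or text-like types: compress


if __name__ == "__main__":
    for ctype, size in [("text/html; charset=utf-8", 50_000),
                        ("video/mp4", 10**9),
                        ("image/png", 500),
                        (None, None)]:
        print(ctype, size, should_compress(ctype, size))
```

Defaulting to compression for anything unrecognized keeps the rule conservative: a wrongly compressed video only costs some replay performance, while a wrongly uncompressed text crawl costs storage.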

Unfortunately, you can't alternate compressed and uncompressed records in a GZ file such as (W)ARC. But it is fairly simple to configure the crawler to use separate output files for these content types. Most crawls generate more than one (W)ARC anyway.

Not only would this resolve the HTTP Range Request issue, it would also avoid a lot of pointless compression/decompression work being done.

November 12, 2015

Workshop on Missing Warc Features

Yesterday I tweeted:

Considering a session on crawl artifacts that don't yet fit in WARCs for #iipcGA16. If you're interested in helping organize, let me know.

— Kristinn Sigurðsson (@kristsi) November 11, 2015

Possible topics include screenshots, SSL certs, HTTP 2.X and AJAX interactions. #iipcGA16

— Kristinn Sigurðsson (@kristsi) November 11, 2015

I thought I'd expand a bit on this without a 140 character limit.

The idea for this session came to me while reviewing the various issues set forward for the WARC 1.1 review. Several of the issues/proposals were clearly important as they addressed real needs, but at the same time they were nowhere near ready for standardization.

It is important that we mature a solution before we enshrine it in the standard. This may include exploring multiple avenues to resolve the matter. At minimum it requires implementing it in the relevant tools as proof of concept.

The issues in question include:

- Screenshots – Crawling using browsers is becoming more common. A very useful by-product of this is a screenshot of the webpage as rendered by the browser. It is, however, not clear how best to store this in a WARC, especially in terms of making it easily discoverable to a user of a Wayback-like replay. This may also affect other types of related resources and metadata. See further on the WARC specification issue tracker: #13 #27

- HTTP 2 – The new HTTP 2 standard uses binary headers. This seems to break one of the expectations of WARC response records containing HTTP responses. See further on the WARC specification issue tracker: #15

- SSL certificates – Store the SSL certificates used during an HTTPS session. #12

- AJAX Interactions – #14

I'd like to organize a workshop during the 2016 IIPC GA to discuss these issues. For that to become a reality I'm looking for people willing to present on one or more of these topics (or a related one that I missed). It will probably be aimed at the open days so attendance is not strictly limited to IIPC members.

The idea is that we'd have a ~10-20 minute presentation where a particular issue's problems and needs were defined and a solution proposed. It doesn't matter if the solution has been implemented in code. Following each presentation there would then be a discussion on the merits of the proposed solution and possible alternatives.

A minimum of three presentations would be needed to "fill up" the workshop. We should be able to fit in four.

So, that's what I'm looking for: volunteers to present one or more of these issues, or a related issue I've missed.

To be clear, while the idea for this workshop comes out of the WARC 1.1 work it is entirely separate from that review. By the time of the GA the WARC 1.1 revision will be all but settled. Consider this, instead, as a first possible step on the WARC 1.2 revision.

September 15, 2015

Looking For Stability In Heritrix Releases

Which version of Heritrix do you use?

If the answer is version 3.3.0-LBS-2015-01 then you probably already know where I'm going with this post and may want to skip to the proposed solution.

3.3.0-LBS-2015-01 is a version of Heritrix that I "made" and currently use because there isn't a "proper" 3.3.0 release. I know of a couple of other institutions that have taken advantage of it.

The Problem (My Experience)

The last proper release of Heritrix (i.e. non-SNAPSHOT release that got pushed to a public Maven repo, even if just the Internet Archive one) that I could use was 3.1.0-RC1. There were regression bugs in both 3.1.0 and 3.2.0 that kept me from using them.

After 3.2.0 came out the main bug keeping me from upgrading was fixed. Then a big change to how revisit records were created was merged in and it was definitely time for me to stop using a four-year-old version. Unfortunately, stable releases had now mostly gone away. Even when a release is made (as I discovered with 3.1.0 and 3.2.0), it may only be "stable" for those making the release.

So, I started working with the "unstable" SNAPSHOT builds of the unreleased 3.3.0 version. This, however, presented some issues. I bundle Heritrix with a few customizations and crawl job profiles. This is done via a Maven build process. Without a stable release, I'd run the risk that a change to Heritrix will cause my internal build to create something that no longer works. It also makes it impossible to release stable builds of tools that rely on new features in Heritrix 3.3.0. Thus no stable releases for the DeDuplicator or CrawlRSS. Both are way overdue.

Late last year, after getting a very nasty bug fixed in Heritrix, I spent a good while testing it and making sure no further bugs interfered with my jobs. I discovered a few minor flaws and wound up creating a fork that contained fixes for these flaws. Realizing I now had something that was as close to a "stable" build as I was likely to see, I dubbed it Heritrix version 3.3.0-LBS-2014-03 (LBS is the Icelandic abbreviation of the library's name and 2014-03 is the domain crawl it was made for).

The fork is still available on GitHub. More importantly, this version was built and deployed to our in-house Maven repo. It doesn't solve the issue of the open tools we have but for internal projects, we now had a proper release to build against.

You can see here all the commits that separate 3.2.0 and 3.3.0-LBS-2014-03 (there are a lot!).

Which brings us to 3.3.0-LBS-2015-01. When getting ready for the first crawl of this year I realized that the issues I'd had were now resolved, plus a few more things had been fixed (full list of commits). So, I created a new fork and, again, put it through some testing. When it came up clean I released it internally as 3.3.0-LBS-2015-01. It's now used for all crawling at the library.

This sorta works for me. But it isn't really a good model for a widely used piece of software. The unreleased 3.3.0 version contains significant fixes and improvements. Leaving people stuck on 3.2.0 or forcing them to use a non-stable release isn't good. And, while anyone may use my build, doing so requires a bit of know-how and there still isn't any promise of it being stable in general just because it is stable for me. This was clearly illustrated with the 3.1.0 and 3.2.0 releases, which were stable for IA, but not for me.

Stable releases require some quality assurance.

Proposed Solution

What I'd really like to see is an initiative of multiple Heritrix users (be they individuals or institutions). These would come together, once or twice a year, create a release candidate and test it based on each user's particular needs. This would mostly entail running each party's usual crawls and looking for anything abnormal.

Serious regressions would either lead to fixes, rollback of features or (in dire cases) cancelling the release. Once everyone signs off, a new release is minted and pushed to a public Maven repo.

The focus here is primarily on testing. While there might be a bit of development work to fix a bug that is discovered, the aim is to vet that the proposed release does not contain any notable regressions.

By having multiple parties, each running the candidate build through their own workflow, the odds are greatly improved that we'll catch any serious issues. Of course, this could be done by a dedicated QA team. But the odds of putting that together are small, so we must make do.

I'd love it if the Internet Archive (IA) was party to this or even took over leading it. But they aren't essential. It is perfectly feasible to alter the "group ID" and release a version under another "flag", as it were, if IA proves uninterested.

Again, to be clear, this is not an attempt to set up a development effort around Heritrix, like the IIPC did for OpenWayback. This is just focused on getting regular stable builds released based on the latest code. Period.

Sign up

If the above sounds good and you'd like to participate, by all means get in touch: in the comments below, on Twitter or by e-mail.

At minimum you must be willing to do the following once or twice a year:

- Download a specific build of Heritrix

- Run crawls with said build that match your production crawls

- Evaluate those crawls, looking for abnormalities and errors compared to your usual crawls

  - A fair amount of experience with running Heritrix is clearly needed.

- Report your results

  - Ideally, in a manner that allows issues you uncover to be reproduced

Better still if you are willing to look into the causes of any problems you discover.

Help with admin tasks, such as pushing releases etc. would also be welcome.
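As a rough illustration of what "evaluating" a candidate crawl against your usual crawls might involve, here is a minimal Python sketch. It is not an agreed procedure, just one possible first check. It assumes the log is whitespace-separated with the fetch status code as the second field (which is my understanding of Heritrix's crawl.log, but verify against your own logs). The function names and the 5% threshold are made up for this example.

```python
from collections import Counter


def status_counts(log_lines):
    """Tally the fetch status code per log line.

    Assumption: fields are whitespace-separated and the status code
    is the second field. Lines with fewer than two fields are skipped.
    """
    counts = Counter()
    for line in log_lines:
        fields = line.split()
        if len(fields) >= 2:
            counts[fields[1]] += 1
    return counts


def status_shifts(baseline, candidate, threshold=0.05):
    """Return {status: (baseline_share, candidate_share)} for every
    status code whose share of all tallied lines differs by more than
    `threshold` between the two crawls."""
    base_total = sum(baseline.values()) or 1
    cand_total = sum(candidate.values()) or 1
    shifts = {}
    for status in sorted(set(baseline) | set(candidate)):
        base_share = baseline[status] / base_total
        cand_share = candidate[status] / cand_total
        if abs(cand_share - base_share) > threshold:
            shifts[status] = (base_share, cand_share)
    return shifts
```

You would feed it the lines of a crawl.log from a known-good crawl and one from the release candidate (for example via `open(path)`), then look more closely at any status code it flags. A shift is only a hint that something changed, not proof of a regression; the web itself changes between crawls.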

At the moment, this is nothing more than an idea and a blog post. Your responses will determine if it ever amounts to anything more.

September 4, 2015

How big is your web archive?

How big is your web archive? I don't want you to actually answer that question. What I'm interested in is, when you read that question, what metric jumped into your head?

I remember long discussions on web archive metrics when I was first starting in this field, over 10 years ago. Most vividly I remember the discussion on what constitutes a "document".

Ultimately, nothing much came of any of those discussions. We are mostly left talking about number of URL "captures" and bytes stored (3.17 billion and 64 TiB, by the way). Yet these don't really convey all that much, at least not without additional context.

Another approach is to talk about websites (or seeds/hosts) captured. That still leaves you with a complicated dataset. How many crawls? How many URLs per site? Etc.

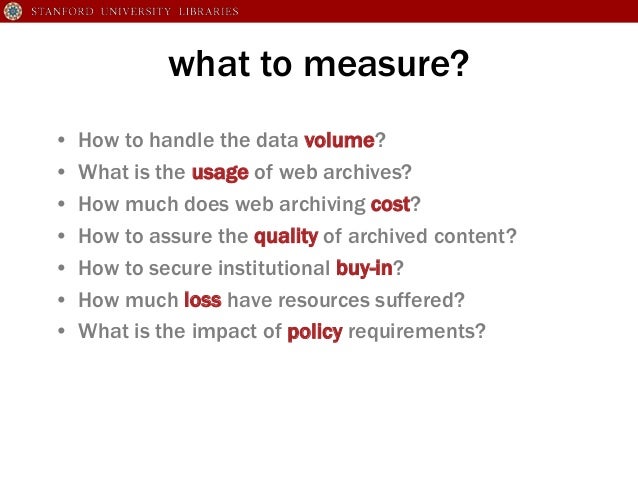

Nicholas Taylor (of Stanford University Libraries) recently shared some slides on this subject, "Measure All the (Web Archiving) Things!", which I found quite interesting and which revived this topic in my mind.

It can be a very frustrating exercise to communicate the qualities of your web archive. If Internet Archive has 434 billion web pages and I only have 3.17 billion does that make IA's collection 137 times better?

I imagine anyone who's reading this knows that that isn't how things work. If you are after worldwide coverage, IA's collection is infinitely better than mine. Conversely, for Icelandic material, the Icelandic web archive is vastly deeper than IA's.

We are, therefore, left writing lengthy reports detailing the number of seeds/sites, URLs crawled, frequency of crawling etc. Not only does this make it hard to explain to others how good (or not good, as the case may be) our web archive is, it also makes it very difficult to judge it against other archives.

To put it bluntly, it leaves us guessing at just how "good" our archive is.

To be fair, we are not guessing blindly. Experience (either first hand or learned from others) provides useful yardsticks and rules of thumb. If we have resources for some data mining and quality assurance, those guesses get much better.

But it sure would be nice to have some handy metrics. I fear, however, that this isn't to be.
