I remember long discussions on web archive metrics when I was first starting in this field, over 10 years ago. Most vividly I remember the discussion on what constitutes a "document".

Ultimately, nothing much came of any of those discussions. We are mostly left talking about the number of URL "captures" and bytes stored (3.17 billion and 64 TiB, by the way). Yet these don't really convey all that much, at least not without additional context.

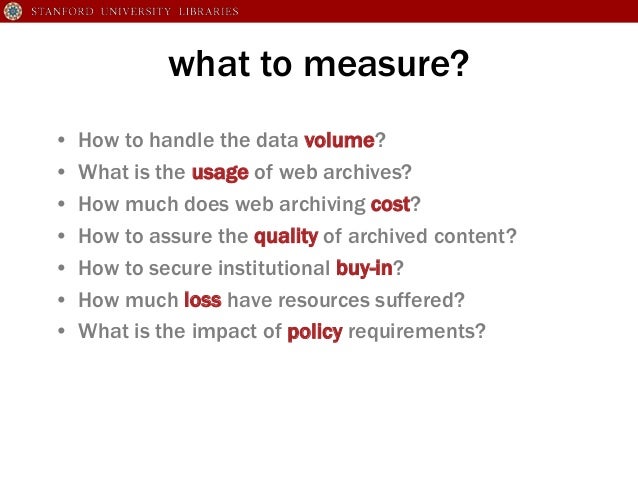

Nicholas Taylor (of Stanford University Libraries) recently shared some slides on this subject. I found them quite interesting, and they revived this topic in my mind.

It can be a very frustrating exercise to communicate the qualities of your web archive. If the Internet Archive has 434 billion web pages and I only have 3.17 billion, does that make IA's collection 137 times better?

I imagine anyone who's reading this knows that that isn't how things work. If you are after worldwide coverage, IA's collection is infinitely better than mine. Conversely, for Icelandic material, the Icelandic web archive is vastly deeper than IA's.

We are, therefore, left writing lengthy reports detailing the number of seeds/sites, URLs crawled, frequency of crawling, etc. Not only does this make it hard to explain to others how good (or not good, as the case may be) our web archive is, it also makes it very difficult to judge it against other archives.

To put it bluntly, it leaves us guessing at just how "good" our archive is.

To be fair, we are not guessing blindly. Experience (either firsthand or learned from others) provides useful yardsticks and rules of thumb. If we have resources for some data mining and quality assurance, those guesses get much better.

But it sure would be nice to have some handy metrics. I fear, however, that this isn't to be.
